As a website owner, you should know that not every website visitor is human. Technology has advanced to the point where developers can write bots that perform tasks without human supervision. So how do you block the bad ones?

In fact, a report by Statista showed that in 2021, bots accounted for about 42% of web traffic worldwide. The word “bot” usually comes across as negative whenever people hear it. However, it’s important to note that there are good bots, like those created by Google and Bing, which index your website so that potential customers can find your business.

However, there are also bad bots on the internet that can negatively impact your websites and, ultimately, your business. In this article, we’ll be going into detail about bots, how they affect your website, and tips to block bad bots.

What Are Bots?

Bots, short for robots, are software programs that perform repetitive, automated tasks, either as part of another computer program or to simulate human activity. The nature of their design means they don’t require any human assistance to carry out their functions. Their tasks are highly repetitive, and bots can complete them faster, more reliably, and more accurately than humans can.

There are different types of bots available presently. Some of them include:

1. Chatbots

Chatbots imitate human conversations using pre-programmed responses. They are mostly used for customer service purposes, reducing the need for human support.

2. Web crawlers

Web crawlers, also referred to as web spiders, web bots, or web robots, are automated programs or scripts designed to browse the content of websites on the Internet so that it can be indexed. They serve as a fundamental component of search engines like Google, Bing, and others. The primary function of web crawlers is to systematically scan web pages, follow hyperlinks, and collect information from diverse websites to construct an index of the internet’s vast content.

The process of web crawling encompasses the following steps:

- Starting Point: The web crawler initiates its exploration from a predetermined list of URLs, commonly known as seeds or starting points.

- Retrieval: The crawler requests the content of a web page from the web server by sending an HTTP request.

- Parsing: After obtaining the content, the crawler parses the HTML or other data formats to extract pertinent information, such as text, images, links, and metadata.

- Link Extraction: Web crawlers identify and extract hyperlinks present on the web page, adding them to a list of URLs to be visited later.

- URL Frontier: The list of URLs to be visited, often called the URL frontier, functions as a queue or priority list for the crawler, guiding it to explore more web pages.

- Crawling and Indexing: The process continues iteratively as the web crawler follows links from one page to another, visiting new pages and extracting information to create an index of the content found on various websites.
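The steps above can be sketched in a few lines of Python. This is only a minimal illustration, not how any real search engine works: the `crawl` function, the `LinkParser` class, and the in-memory `FAKE_SITE` dictionary are all hypothetical names made up for this example, and the fake site stands in for real HTTP requests so the sketch runs without a network connection.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=10):
    """Breadth-first crawl. `fetch(url)` returns HTML text, or None on failure."""
    frontier = deque(seeds)  # URL frontier: pages waiting to be visited
    seen = set(seeds)        # avoids queueing the same URL twice
    index = {}               # maps each visited URL to its outgoing links
    while frontier and len(index) < max_pages:
        url = frontier.popleft()
        html = fetch(url)    # retrieval
        if html is None:
            continue
        parser = LinkParser()
        parser.feed(html)    # parsing
        links = [urljoin(url, href) for href in parser.links]  # link extraction
        index[url] = links
        for link in links:
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return index


# Hypothetical in-memory "site" used instead of real HTTP requests.
FAKE_SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about">About</a>',
}

pages = crawl(["https://example.com/"], FAKE_SITE.get)
print(sorted(pages))
```

A real crawler would replace `FAKE_SITE.get` with a function that sends HTTP requests, and would also check each site’s robots.txt file and pause between requests.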

Web crawlers play a vital role in the functionality of search engines, enabling them to index vast amounts of web content and deliver relevant search results to users when they seek specific information. Additionally, web crawlers find applications in various other areas, including data scraping, website monitoring, and gathering data for research and analysis.

3. Social bots

These bots operate at scale across social media channels, carrying out various automated tasks.

4. Malicious bots

Malicious bots are those that cybercriminals use to carry out illegal activities, such as stealing private information or launching distributed denial-of-service (DDoS) attacks. Bots that violate a website’s robots.txt rules can also be considered malicious.

Good Bots

Bots cannot be inherently classified as good or bad. However, they can be used with good or bad intent.

A good bot is one that executes a helpful or useful task for your business or website visitors. It is not created with bad intentions, and it normally does not damage or worsen the user experience of the websites it crawls.

Good bots typically belong to reputable companies. They respect the website owner’s rules that regulate how often bots may crawl and index the website. These rules are normally stated in the website’s robots.txt file.

A good bot is programmed to search for that file, study it, and follow its rules before executing any task. There are different types of good bots including:

- Search engine bots, e.g., Googlebot, Bingbot, Yandexbot

- Social bots, e.g., Pinterest crawler and Facebook crawler

- Marketing bots, e.g., SEMrush bots and Ahrefs bots

- Site monitoring bots, e.g., Uptime bots and WordPress pingbacks

- Voice engine bots, e.g., Alexa’s crawler and Applebot

These good bots are useful and helpful for your site, and ultimately, your business. However, this doesn’t mean you should permit every good bot on your website. Good bots take up bandwidth and can slow down your site’s speed. Allow only the necessary bots by defining them in your robots.txt file.
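To make the idea concrete, here is a small sketch of how robots.txt rules are interpreted, using Python’s standard `urllib.robotparser` module. The rules themselves are an assumption made up for this example: `ExampleSeoBot` is a hypothetical bot name and `example.com` is a placeholder domain, so adapt both to your own site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: allow Googlebot everywhere, block one
# marketing bot entirely, and keep every other bot out of /admin/.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: ExampleSeoBot
Disallow: /

User-agent: *
Disallow: /admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("Googlebot", "https://example.com/products"))       # True
print(parser.can_fetch("ExampleSeoBot", "https://example.com/products"))   # False
print(parser.can_fetch("SomeOtherBot", "https://example.com/admin/login")) # False
print(parser.can_fetch("SomeOtherBot", "https://example.com/products"))    # True
```

Keep in mind that robots.txt is only a request. Well-behaved bots honor it, but nothing in the file itself can force a bot to comply.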

Bad Bots

Bad bots are those that violate a website’s terms of service and its robots.txt rules. They operate in a way that negatively impacts a website. Bad bots are usually created by scammers, cybercriminals, or other individuals involved in illegal activities. Bad bots either don’t read or simply ignore the rules in the robots.txt file.

Hackers may also use botnets for some types of attacks, such as DDoS. A botnet is a group of devices (such as personal computers or IoT devices) that have been infected with malware and are now under the attacker’s control, turning them into zombie devices.

Why Are Bad Bots So Dangerous to Your Business?

Bad bots are evolving and have become smarter than ever. Increasingly, they simulate real human workflows across websites to “act” like real users. As a result, it has become quite tricky for a bot blocker to get rid of them. Below are some ways bad bots can hurt your business.

1. Content/price scraping

These are bots that illegally copy content from your websites, like product descriptions or prices, and post the stolen content to another site without your knowledge or permission. This is a form of data scraping. Content scraping bots can divert traffic to other websites by republishing the high-quality, keyword-dense content they scraped from your site.

2. Account takeover

Account takeover (ATO) is an automated attack where cybercriminals compromise online accounts, usually by using bad bots for techniques such as credential stuffing or credential cracking. ATO can lead to data leaks and customers’ personal data being compromised. This can badly affect your business and may even lead to legal troubles.

3. Account creation

Bad bot account creation is the process of creating accounts using fake or stolen identity information. In this type of attack, bad actors use automated bots to create a large number of fake accounts within a short period.

For example, cybercriminals can use fake accounts to bomb review sites with excellent reviews of their product while simultaneously adding negative reviews about a competing product.

4. Skew analytics

Heavy and unauthorized bot traffic can negatively affect analytics metrics, including session duration, bounce rate, page views, geolocation of users, and conversions. It is quite challenging to gauge the performance of a site overrun by bot activity. This can lead to metric deviations that can be very frustrating for the site owner.

The statistical noise bots produce also undermines efforts to improve the site, such as A/B testing and conversion rate optimization. Ad publishers might even charge much more for the ad space because they assume you have increased traffic.

5. Checkout and application abuse bots

These kinds of bots are extremely sophisticated and are used for a variety of malicious purposes. They are frequently used in e-commerce to manipulate prices and purchase lower-cost goods and services. Similar bots are employed to attack decentralized exchanges and influence cryptocurrency prices.

All of the above-listed bots have the ability to harm any form of business, even to the point of permanently ending the business. It becomes imperative for business owners to look for ways to protect their websites.

Ways to Block Bad Bots and Protect Your Business

Below are some practical ways you can protect your business from bad bots.

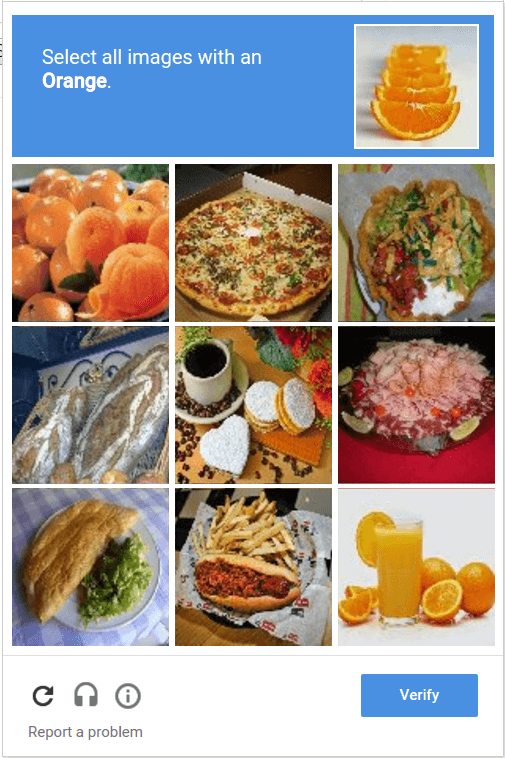

1. Challenging bad bots with CAPTCHA

CAPTCHA (Completely Automated Public Turing test to tell Computers and Humans Apart) is a type of security measure known as challenge-response authentication. It asks users to complete a simple test proving they are human and not a bot, either before accessing an endpoint (link) or as a second step when logging in to a password-protected account. CAPTCHA tests come in text-based, picture-based, and sound-based types.

A text-based CAPTCHA test comprises two components: a text box and a random sequence of letters and/or numbers that appears as a warped image. To pass the test and prove you are human, simply type the characters you see in the image into the text box.

Business owners can challenge the bot with a CAPTCHA or with invisible tests, like suddenly asking the client to move the mouse cursor in a certain way, which is very difficult for a bot to do.

However, nowadays, hackers can use workarounds to bypass these bot blockers and challenges. They can use CAPTCHA farm services that allow hackers to pass the CAPTCHA to a human employee to solve before passing it back to the bot.

2. Honeypot trapping

Honeypot trapping is a security technique for attracting bots/attackers, so they can be studied to improve your application or website security policies.

These traps can target bots that try to inject fake email addresses into an email collection form, hackers who try to scrape email addresses from a web page, or harvesters that collect personal data like bank account details. Alternatively, the bot can be redirected to another page that is visually similar but has illegitimate/fake content in it.

Honeypot trapping helps in replicating how vulnerabilities behave in a system by drawing an attacker to a certain endpoint. Hence, you can understand a bot/attacker’s behavior patterns, as any attempt to communicate with the endpoint is considered suspicious and easily flagged.
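One common and simple form of this trap is a hidden form field. The sketch below assumes an email sign-up form with an extra field that is hidden from human visitors by CSS, so only an automated form filler would put a value in it. The field name `website` and the function `is_probable_bot` are hypothetical choices for this example, not part of any particular framework.

```python
# Hypothetical name of a form field hidden from human visitors with CSS.
HONEYPOT_FIELD = "website"


def is_probable_bot(form_data):
    """Return True when the hidden honeypot field was filled in."""
    value = form_data.get(HONEYPOT_FIELD, "")
    return bool(value.strip())


human_submission = {"email": "jane@example.com", "website": ""}
bot_submission = {"email": "spam@example.com", "website": "http://spam.example"}

print(is_probable_bot(human_submission))  # False
print(is_probable_bot(bot_submission))    # True
```

Submissions flagged this way can be logged rather than silently dropped, so you can study which endpoints bots are probing and how often.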

3. Bandwidth/Rate limiting

As bots improve at imitating human behavior, bandwidth/rate limiting is a strategy to stop them. In this strategy, each website visitor is identified by a signature or fingerprint, such as an IP address, browser and operating system versions, or location. This signature or fingerprint can be cross-checked against a database of previously identified bots, and any match can be blocked.
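As a rough illustration of the fingerprint check, the sketch below hashes a few visitor attributes into a single value and looks it up in a set of known bot fingerprints. Everything here is an assumption for demonstration: the attributes chosen, the `fingerprint` and `is_known_bot` helpers, and the sample blocklist entry. Real bot-detection products use far richer signals.

```python
import hashlib


def fingerprint(ip, user_agent, os_version):
    """Combine visitor attributes into one stable hash value."""
    raw = "|".join([ip, user_agent, os_version])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


# Hypothetical database of fingerprints seen in earlier attacks.
KNOWN_BOT_FINGERPRINTS = {
    fingerprint("198.51.100.23", "BadScraper/1.0", "Linux"),
}


def is_known_bot(ip, user_agent, os_version):
    """Return True when the visitor matches a stored bot fingerprint."""
    return fingerprint(ip, user_agent, os_version) in KNOWN_BOT_FINGERPRINTS


print(is_known_bot("198.51.100.23", "BadScraper/1.0", "Linux"))  # True
print(is_known_bot("203.0.113.7", "Mozilla/5.0", "Windows 11"))  # False
```

An exact-match lookup like this is easy for a bot to evade by changing a single attribute, which is one reason it is usually combined with request-rate checks.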

Suppose there are too many requests to access your website within a specified time frame. In that case, the rate-limiting solution will not fulfill the IP address’s requests for a certain amount of time.
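Here is a minimal sketch of that idea as a sliding-window rate limiter. The `RateLimiter` class, the limit of three requests per ten seconds, and the sample IP address are assumptions chosen to keep the example short. Timestamps are passed in explicitly so the behavior is easy to follow.

```python
from collections import defaultdict, deque


class RateLimiter:
    """Allows at most `max_requests` per IP within `window_seconds`."""

    def __init__(self, max_requests, window_seconds):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.history = defaultdict(deque)  # ip -> timestamps of accepted requests

    def allow(self, ip, now):
        timestamps = self.history[ip]
        # Forget requests that have fallen out of the time window.
        while timestamps and now - timestamps[0] >= self.window_seconds:
            timestamps.popleft()
        if len(timestamps) >= self.max_requests:
            return False  # too many recent requests: refuse this one
        timestamps.append(now)
        return True


limiter = RateLimiter(max_requests=3, window_seconds=10)
results = [limiter.allow("203.0.113.7", now=t) for t in (0, 1, 2, 3, 11)]
print(results)  # [True, True, True, False, True]
```

The fourth request is refused because three requests were already accepted within the previous ten seconds. By the fifth request, the two oldest have aged out of the window, so it is accepted again. In production, `now` would typically come from a clock such as `time.monotonic()`.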

Alternatively, these bots can be allowed to access the site with a throttled bandwidth allocation, which makes the bot operation much less efficient. This method is effective at stopping brute-force attacks, in which a bot tries thousands of different passwords in order to guess the correct one and break into an account. It also helps against DDoS attacks (malicious attempts to disrupt the normal traffic of a targeted server, service, or network by flooding it or its surrounding infrastructure with Internet traffic) and web scraping.

4. Regular updates

You must keep your website and every single integration updated. Ensure that you always stay current with new releases. This is because bots can sometimes gain access to your website through a vulnerability in an older version. Also, software providers regularly release security updates that protect business owners.

5. Upgrade your hosting

Bad bots target unstable web hosting infrastructures, and that’s why it’s important to ensure your hosting provider is reliable, secure, and has the necessary bot protection in place. If you’re using WordPress, for example, ensure you use a hosting solution such as Cloudways that protects against traffic congestion caused by malicious bots, brute-force attacks, and DDoS.

Stay Proactive

It is now clear how blocking bad bots can help your business. Business/website owners need to treat bad bot blocking as a high priority. Also, it’s essential to note that it’s not a one-off effort. There’s a need to constantly watch out for these bad bots and block them promptly. A bot management service may offer a long-term solution.